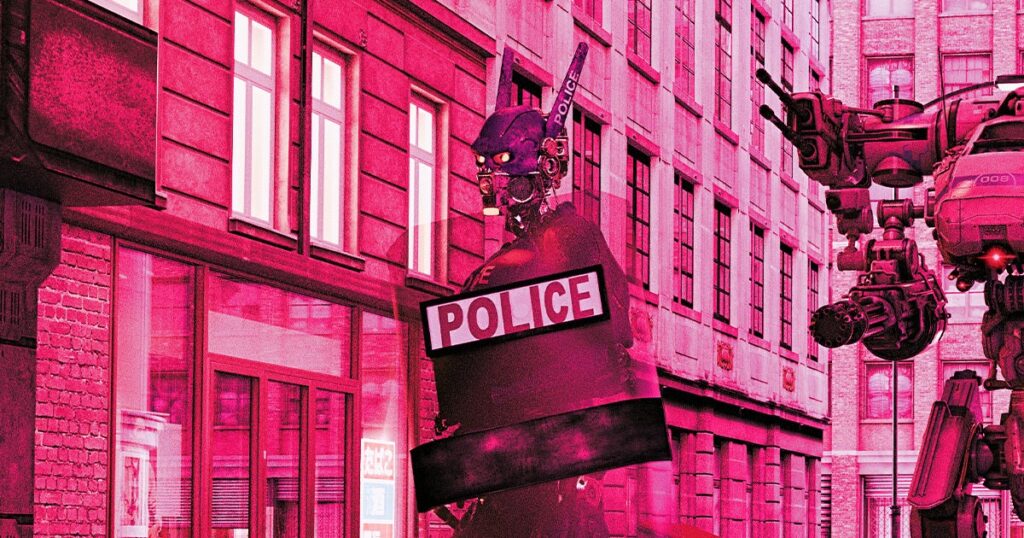

Bot crimes

A police department in the United Kingdom is testing an AI-powered system that could help solve cold cases by condensing decades of detective work into just a few hours, Sky News reports.

But there’s no word yet on the accuracy of this Australian-developed platform, called Soze. That is a major concern, given that AI models tend to spit out wildly incorrect results or hallucinate made-up information.

Avon and Somerset Police, which covers parts of south-west England, are testing the program by having Soze scan and analyze emails, social media accounts, video, financial statements and other documents.

As Sky News reports, the AI was able to scan evidence from 27 ‘complex’ cases in about 30 hours, the equivalent of 81 years of human work, which is staggering. No wonder this police department is interested in the program: these numbers sound like a force multiplier on steroids, which makes it especially attractive to law enforcement agencies constrained by limited staffing and budgets.

“Maybe you have a cold case review that just seems impossible because of the amount of material that’s out there, and you feed that into a system like this that can just process it and then give you an assessment of it,” the British National Police Chiefs’ Council’s Gavin Stephens told Sky News. “I can see this being really helpful.”

Minority Report

Another AI project Stephens mentioned involves compiling a database of knives and swords that suspects have used to attack, maim or kill victims in the United Kingdom.

Stephens seems optimistic about the imminent rollout of these AI tools, but it would make sense to first validate that they work well. AI, perhaps especially in law enforcement, is notoriously error-prone and can lead to false positives.

One model used to predict a suspect’s chances of committing another crime in the future was not only inaccurate but also biased against Black people, which sounds like something straight out of Philip K. Dick’s novella ‘Minority Report’, later adapted into the 2002 Steven Spielberg film. AI facial recognition can also lead to false arrests, with minorities repeatedly punished for crimes they did not commit.

These inaccuracies are so concerning that the U.S. Commission on Civil Rights recently criticized the use of AI in policing.

There is a perception that because these are machines performing analytics, they will be infallible and accurate. But they are built on data collected by people, who can be biased and downright wrong, so known problems are baked in from the start.

More on AI and policing: Police say hallucinating AIs are ready to write police reports that could send people to jail